2020-04-17

This blog post was originally published as a Towards Data Science article.

In this blog post, I am going to investigate the serial (aka temporal) dependence phenomenon in random binary sequences.

Random binary sequences are sequences of zeros and ones generated by random processes. Primary outputs generated by most random number generators are binary. Binary sequences often encode the occurrence of random events:

In many of those situations, it is necessary to ensure that the tracked events occur independently of each other. For example, the occurrence of an incident in a production system should not make the system more incident prone. For that, we need to take a close look at the dependence structure of the binary sequence under consideration.

In this blog post, I am going to develop and test a scoring method for quantifying the dependence strength in random binary sequences. The method, which I call the Meixner Dependency Score, is easy to implement and is based on the orthogonal polynomials associated with the geometric distribution.

After reading through this blog post, you will know:

Given a sequence $X = (X_1, X_2, \ldots)$ of random variables taking values in the set $\{0, 1\}$ and a probability $p \in (0, 1)$, I would like to investigate the serial dependencies between the elements of $X$. This investigation should be based on a sample drawn from $X$, i.e. a finite sequence of zeros and ones $x = (x_1, \ldots, x_n)$. This is a very difficult and deep problem, and in this blog post I am going to focus on collecting evidence to support or reject the following two basic hypotheses:

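If we assume that the elements of the sequence $X$ are independent samples from a fixed Bernoulli random variable with success probability $p$, then we can estimate $p$ by calculating the average of the observed sample $x$. This is because the expectation of a Bernoulli random variable with success probability $p$ is $p$.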

To set an appropriate context for the serial dependence detection problem, let me remark that random binary sequences are often associated with discrete observations of a system with two states. We say that “an event” occurred at time $t$ if $x_t = 1$.

How can we check whether our events occur independently of each other and gather evidence that there is no serial dependence between the event times?

We often tend to see serial dependence in situations where there is none. Typical examples are the “winning or lucky streaks” experienced by gamblers in casinos.

To rigorously investigate the question of serial dependence in the sequence $X$ based on the observation $x$, we can look at the distribution of waiting times between the events. For example, the sequence

`[0, 1, 1, 0, 0, 1, 0, 0]`

yields the following sequence of waiting times

`[1, 3]`.

Note that the waiting time calculation discards the initial and trailing zeros in the event sequence.

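To formally define the waiting time sequence based on the sample $x$, consider the increasing sequence of all indices $t_1 < t_2 < \ldots < t_k$ such that for each $i$ we have $x_{t_i} = 1$. We set $w_i = t_{i+1} - t_i$ for $i = 1, \ldots, k - 1$.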
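The definition above translates into a couple of NumPy calls. This is a minimal sketch; the function name `waiting_times` is my own choice for illustration and is not taken from the companion script.

```python
import numpy as np

def waiting_times(x):
    """Return the gaps between consecutive ones in a 0/1 sequence."""
    event_idx = np.flatnonzero(np.asarray(x))  # positions at which an event occurred
    return np.diff(event_idx)                  # differences of consecutive event positions

print(waiting_times([0, 1, 1, 0, 0, 1, 0, 0]))  # [1 3]
```

A sequence with fewer than two events yields an empty array, which matches the convention that leading and trailing zeros are discarded.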

For an i.i.d. sequence of Bernoulli random variables, the sequence of waiting times consists of i.i.d. random variables with the geometric distribution. Let’s have a closer look at its properties.

The probability mass function (PMF) of the geometric distribution with parameter is given by

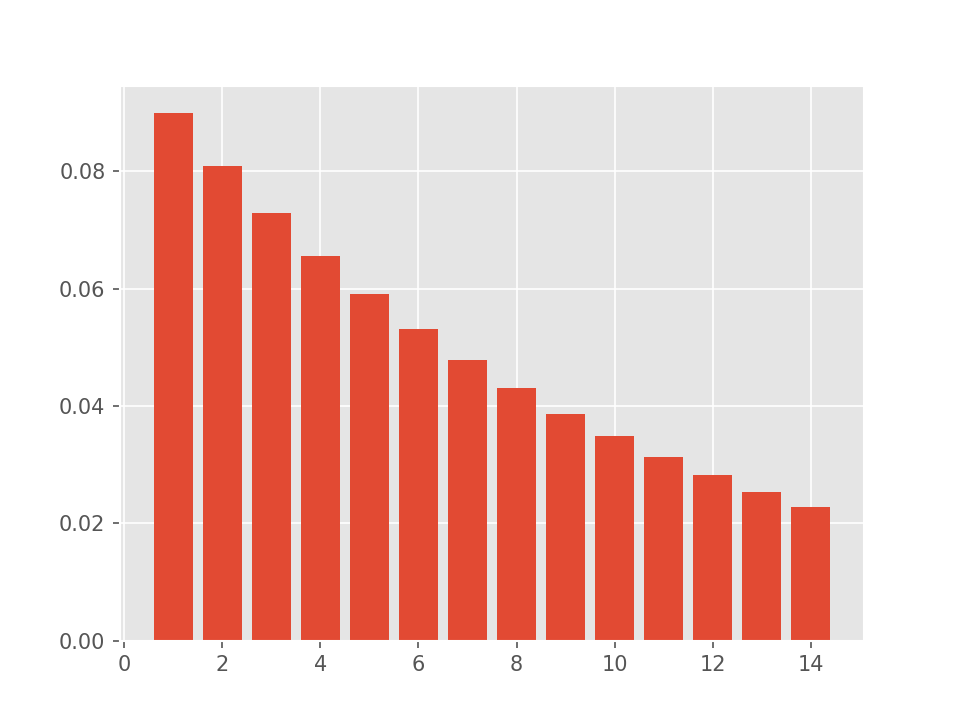
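As a quick plausibility check, one can compare the empirical frequencies of simulated waiting times with the geometric PMF on $\{1, 2, \ldots\}$, i.e. $(1 - p)^{k - 1} p$. The sketch below assumes an illustrative success probability of 0.3 and a sample length of 200,000; both values are my choices, not the settings used for the plots in this post.

```python
import numpy as np

rng = np.random.default_rng(0)
p = 0.3                                    # illustrative success probability (assumption)
x = rng.random(200_000) < p                # i.i.d. Bernoulli(p) event sequence
w = np.diff(np.flatnonzero(x))             # waiting times between consecutive events

ks = np.arange(1, 6)
empirical = np.array([(w == k).mean() for k in ks])
theoretical = (1 - p) ** (ks - 1) * p      # geometric PMF on {1, 2, ...}

for k, e, t in zip(ks, empirical, theoretical):
    print(f"k={k}: empirical={e:.4f}, geometric={t:.4f}")
```

The two columns should agree up to sampling noise, and the average waiting time should be close to $1/p$.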

The PMF of the geometric distribution with the parameter looks as follows.

One way to test whether a sequence of waiting times follows the geometric distribution is to look at the orthogonal polynomials generated by that distribution.

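A family of orthogonal polynomials is intimately tied to every probability distribution $\mu$ on $\mathbb{R}$. For any such distribution, we can define a scalar product between (square-integrable) real-valued functions $f$ and $g$ as

$$\langle f, g \rangle_\mu = E[f(W)\, g(W)],$$

where $W$ is a random variable with distribution $\mu$. For a geometric distribution with parameter $p$, the above scalar product takes the form

$$\langle f, g \rangle_\mu = \sum_{k=1}^{\infty} f(k)\, g(k)\, (1 - p)^{k - 1}\, p.$$

We say that the functions $f$ and $g$ are orthogonal (with respect to $\mu$) if and only if $\langle f, g \rangle_\mu = 0$.

Finally, a sequence of polynomials $(M_j)_{j \ge 0}$ is called orthogonal if and only if $\langle M_i, M_j \rangle_\mu = 0$ for all $i \ne j$, and each $M_j$ has degree $j$. As a consequence, since $M_0$ is a non-zero constant, we have $E[M_j(W)] = 0$ for $j \ge 1$. This is the tool I am going to use to check whether a given sample of waiting times follows a geometric distribution.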

The family of orthogonal polynomials corresponding to the geometric distribution is a special case of the Meixner family derived from the negative binomial distribution (a generalization of the geometric distribution). The members of the Meixner family satisfy the following handy recursive relation:

with $M_{-1} = 0$ and $M_0 = 1$. This relation is used to calculate the sequence $(M_j)_{j \ge 0}$. Also, note that each $M_j$ depends on the value of the parameter $p$.
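For reference, here is the recursion for the orthonormal polynomials of the geometric distribution on $\{1, 2, \ldots\}$ in the form I take from Candelon et al. The symbols $w$ (a waiting time) and $p$ (the success probability) are my notation, and the formula should be checked against the paper before it is relied upon.

```latex
M_{j+1}(w; p) = \frac{(1 - p)(2j + 1) + p\,(j - w + 1)}{(j + 1)\sqrt{1 - p}}\, M_j(w; p)
              - \frac{j}{j + 1}\, M_{j-1}(w; p),
\qquad M_{-1}(w; p) = 0, \quad M_0(w; p) = 1 .
```

For $j = 0$ this gives $M_1(w; p) = (1 - p\,w) / \sqrt{1 - p}$, whose expectation vanishes under the geometric distribution because $E[W] = 1/p$.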

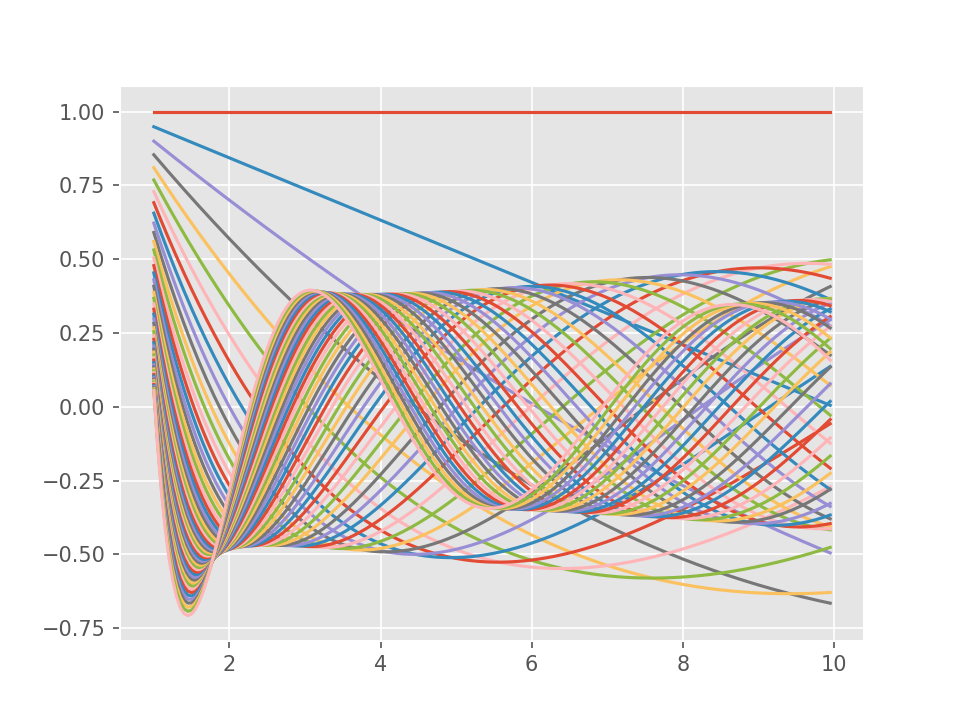

In the companion Python source code, the function `meixner_poly_eval` is used to evaluate Meixner polynomials up to a given degree on a given set of points. I used this function to plot the graphs of these polynomials up to degree 50.

As mentioned above, for every polynomial in the equation

holds, if and only if and

This relation can be used to test whether a given sample of waiting times is drawn from the geometric distribution with parameter $p$. We are going to estimate the expectations $E[M_j(W)]$ and use the estimates as scores: values close to zero can be interpreted as evidence for the geometric distribution with parameter $p$. If the values are far from zero, this is a sign that we need to revisit our assumptions and possibly discard the i.i.d. hypothesis about the original event sequence. Note that significant deviations from zero can also occur if the events are independent but the true probability differs significantly from the putative $p$. This can easily be tested as described earlier.

In order to arrive at a single number (a score) that quantifies the degree of serial dependence in the sense developed above, we will define the Meixner dependency score (MDS) as the mean of the expectation estimates for the first $m$ Meixner polynomials evaluated at a sample of waiting times $w$:

The idea of using orthogonal polynomials to test the dependence structure assumptions in binary sequences has its roots in the method of moments inference and was developed in the financial literature to backtest Value-at-Risk models. In this blog post I adapt the approach outlined in Candelon et al. See the References section below.
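The score described above can be sketched in a few lines. The sketch assumes the recursion from Candelon et al. for the orthonormal polynomials of the geometric distribution on $\{1, 2, \ldots\}$. The names `meixner_eval_sketch` and `meixner_dependency_score`, the default of five polynomials, and the `use_abs` switch are my own illustrative choices and need not match the companion script.

```python
import numpy as np

def meixner_eval_sketch(w, p, max_degree):
    """Evaluate M_1, ..., M_max_degree at the points w.

    Returns an array of shape (max_degree, len(w)).
    Assumes the three-term recursion attributed to Candelon et al.
    """
    w = np.asarray(w, dtype=float)
    prev = np.zeros_like(w)   # M_{-1}
    curr = np.ones_like(w)    # M_0
    out = []
    for j in range(max_degree):
        coef = ((1 - p) * (2 * j + 1) + p * (j - w + 1)) / ((j + 1) * np.sqrt(1 - p))
        prev, curr = curr, coef * curr - j / (j + 1) * prev   # curr becomes M_{j+1}
        out.append(curr)
    return np.array(out)

def meixner_dependency_score(x, p, max_degree=5, use_abs=False):
    """Mean of the sample-mean estimates of E[M_j(W)], j = 1..max_degree."""
    w = np.diff(np.flatnonzero(np.asarray(x)))               # waiting times
    estimates = meixner_eval_sketch(w, p, max_degree).mean(axis=1)
    if use_abs:                                               # optional: avoid cancellation of signs
        estimates = np.abs(estimates)
    return estimates.mean()

rng = np.random.default_rng(0)
x = rng.random(10_000) < 0.3                                  # i.i.d. events, illustrative p
print(meixner_dependency_score(x, p=0.3))
```

With `use_abs=False` the function follows the wording "mean of expectation estimates" literally; `use_abs=True` is an alternative reading in which deviations of opposite sign cannot cancel.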

To find out whether the procedure devised above has a chance to work in practice, I am going to test it using synthetically generated data. This type of testing procedure is commonly known as a Monte Carlo (MC) study. It doesn’t replace tests with real-world data, but it can help evaluate a statistical method in a controlled environment.

The experiment we are going to perform consists of the following steps:

Let’s start with a simple case of simulating an i.i.d. sequence of Bernoulli random variables with success probability $p$. This can be used as a sanity check and to test whether our implementation of the procedure is correct.

For the following experiment we set and simulate samples from
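A stripped-down version of such a sanity check, restricted to the first-degree polynomial, might look as follows. Any first-degree polynomial with zero mean under the geometric distribution is proportional to $1 - p\,w$; the scaling by $\sqrt{1 - p}$ assumes the orthonormal normalization. The values `p = 0.3`, `n = 1000` and `n_sim = 500` are illustrative assumptions, not the settings behind the figures in this post.

```python
import numpy as np

rng = np.random.default_rng(42)
p, n, n_sim = 0.3, 1000, 500      # illustrative settings (assumptions)

def m1_estimate(x, p):
    """Sample mean of the first-degree polynomial over the waiting times of x."""
    w = np.diff(np.flatnonzero(x))
    return np.mean((1 - p * w) / np.sqrt(1 - p))

# One estimate per simulated i.i.d. Bernoulli(p) sample of length n.
estimates = np.array([m1_estimate(rng.random(n) < p, p) for _ in range(n_sim)])
print(f"mean={estimates.mean():.4f}, std={estimates.std():.4f}")
```

For independent events the estimates should scatter around zero, with a spread that shrinks as the sample length grows.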

For a model with a simple form of serial dependence, we select the probabilities and set the distributions of random variables in the sequence as follows. Let be Bernoulli with success probability The distributions of for are conditional on :

In order to compare this model with an i.i.d. Bernoulli model with the success probability , we need to set and such that the unconditional distributions of are

In other words, for a given , we are looking for a , such that the random binary sequence defined above satisfies This binary sequence can be represented as a simple Markov chain with the state space , the transition probability matrix given by

and the initial distribution The marginal distribution of is given by This basic result can be found in any book about Markov Chains (see the References section below). The task of finding a such that is as close to as possible, can be easily solved with an off-the-shelf optimization algorithm such as BFGS.

For example, the above procedure quickly yields for and
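The calibration step can be sketched with SciPy. The parameterization below is an assumption made for illustration: `p_after_0` and `p_after_1` denote the probabilities of an event given that the previous value was 0 or 1, `p_init` is the probability that the first element is 1, and `n_steps` is the number of transitions. These names and the example values are hypothetical and need not match the model used in this post.

```python
import numpy as np
from scipy.optimize import minimize

def marginal_prob_of_one(p_after_0, p_after_1, p_init, n_steps):
    """P(X = 1) after n_steps transitions of a two-state Markov chain."""
    P = np.array([[1 - p_after_0, p_after_0],     # row 0: transitions from state 0
                  [1 - p_after_1, p_after_1]])    # row 1: transitions from state 1
    lam = np.array([1 - p_init, p_init])          # initial distribution
    return (lam @ np.linalg.matrix_power(P, n_steps))[1]

def solve_p_after_1(p_target, p_after_0, p_init, n_steps=50):
    """Find p_after_1 such that the marginal event probability is close to p_target."""
    def loss(b):
        return (marginal_prob_of_one(p_after_0, b[0], p_init, n_steps) - p_target) ** 2
    res = minimize(loss, x0=np.array([p_target]), method="BFGS")
    return res.x[0]   # BFGS is unconstrained: check that the result lies in [0, 1]

b = solve_p_after_1(p_target=0.5, p_after_0=0.4, p_init=0.5)
print(b, marginal_prob_of_one(0.4, b, 0.5, 50))
```

In this parameterization the stationary event probability is `p_after_0 / (1 - p_after_1 + p_after_0)`, which equals 0.5 at `p_after_1 = 0.6` for the example values, so the optimizer should land near that value.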

Let’s test the dependency scoring algorithm for the following values and An MC evaluation of the Meixner polynomials leads to the following Meixner dependency scores:

| p1 | p2 | MDS |
|---|---|---|
| 0.5 | 0.5 | 0.083 |
| 0.4 | 0.24 | 0.194 |
| 0.3 | 0.43 | 0.382 |
| 0.2 | 0.62 | 0.583 |

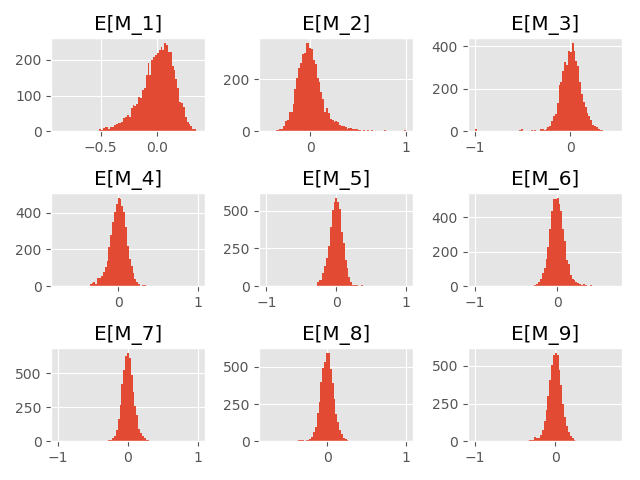

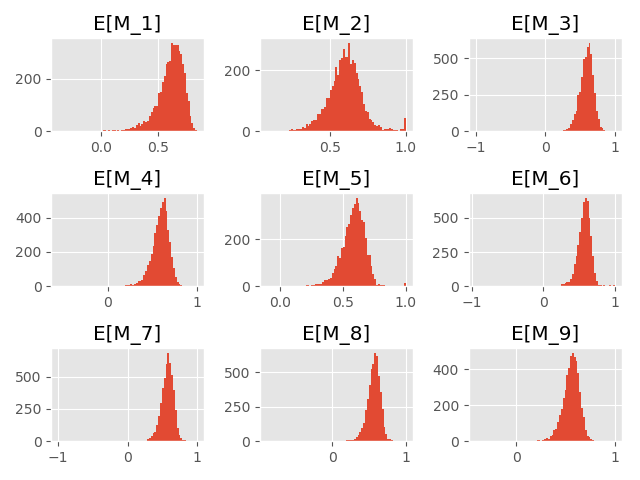

Again, 5000 simulations of samples of length 1000 yield the following histograms for the expectation estimates.

As we can see, the histograms are shifted slightly to the left.

All the results and plots presented in this blog post have been generated using the following Python script: meixner.py

In this blog post, we learned about the concept of serial dependence in binary sequences. We implemented a serial dependence detection method, the Meixner Dependency Score, based on the Meixner orthogonal polynomials and tested its performance using a simple Markov chain model.

An important financial use case for dependency testing is the analysis of the Value-at-Risk exceedance event sequence generated by a market risk model. Market risk models are widely used in the banking industry for regulatory capital requirement calculations and in the asset management industry for portfolio construction and risk management.

The Value-at-Risk (VaR) is a quantile of the loss distribution for a financial asset price over a fixed time horizon. For example, an investor holding a long position in gold may be interested in the 95% VaR over a one-day time horizon for the gold price in USD. For a well-calibrated VaR model, the losses on 5% of the business days observed over an investment period will exceed the 95% VaR. The VaR exceedance events must not only occur with the expected frequency but must also be independent of each other. Calibrating a market risk model so that the dependency between exceedance events is low is typically much more difficult than calibrating the exceedance frequency alone. It is especially important in times of financial distress, because underestimating the risk leads inevitably to underhedging and, possibly, to more severe losses.

Many thanks to Sarah Khatry for reading drafts of this blog post and contributing countless improvement ideas and corrections.